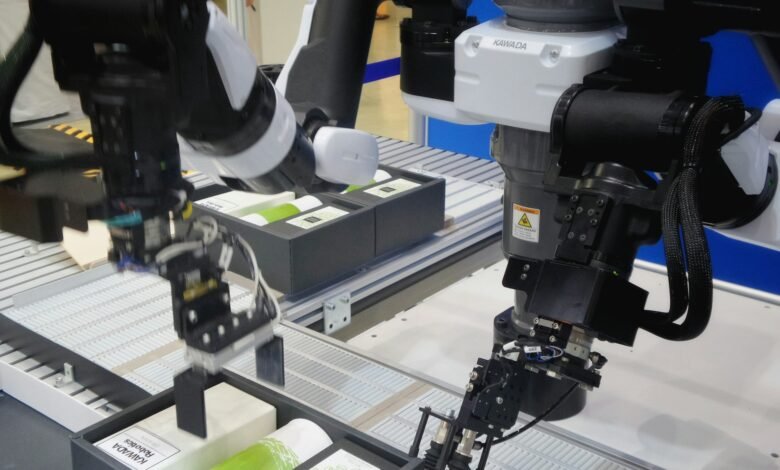

How will multimodal AI enhance collaborative robots in 2025?

With 2025 approaching, the integration of multimodal AI with collaborative robots (cobots) is expected to change how humans and robots interact in industrial automation. The combination promises more intuitive, efficient, and adaptable robotic systems that can work alongside human workers. Here’s how multimodal AI is expected to enhance collaborative robots in 2025:

Enhanced Perception and Understanding

Multimodal AI will significantly improve cobots’ ability to perceive their environment and their human collaborators. By integrating data from multiple sensors, including cameras, microphones, and tactile sensors, cobots will build a more complete picture of their surroundings than any single sensor could provide.

Visual Recognition and Processing

Advanced computer vision algorithms will enable cobots to recognize objects, gestures, and facial expressions with greater accuracy. This will allow them to respond more appropriately to human cues and adapt their behavior accordingly.

Natural Language Processing

Cobots equipped with natural language processing capabilities will be able to understand and respond to verbal commands, making communication between humans and robots more natural and intuitive.

Improved Decision-Making and Adaptability

Multimodal AI will enhance cobots’ decision-making capabilities, allowing them to adapt to changing conditions and tasks more effectively.

Context-Aware Task Execution

By analyzing data from multiple sources, cobots will be able to make more informed decisions about how to execute tasks. They will consider factors such as the current state of the work environment, human worker preferences, and real-time feedback.
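As a rough illustration of context-aware execution, the sketch below picks a motion speed from three context signals. The `Context` fields, the `choose_speed` function, and all thresholds are hypothetical values chosen for the example; they are not taken from any cobot API or safety standard.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Hypothetical snapshot of the work cell (all fields are assumptions)."""
    human_distance_m: float    # distance to the nearest worker, in metres
    worker_prefers_slow: bool  # a stored preference for this worker
    part_detected: bool        # real-time feedback from the vision system


def choose_speed(ctx: Context, max_speed: float = 1.0) -> float:
    """Return a speed (same units as max_speed) suited to the context."""
    if not ctx.part_detected:
        return 0.0  # nothing to work on, so stay still

    # Illustrative distance bands, not values from a safety standard.
    if ctx.human_distance_m < 0.5:
        scale = 0.1
    elif ctx.human_distance_m < 1.5:
        scale = 0.5
    else:
        scale = 1.0

    # A worker preference can only make the robot slower, never faster.
    if ctx.worker_prefers_slow:
        scale = min(scale, 0.5)

    return max_speed * scale
```

The design choice worth noting is that the preference is applied as a cap after the distance rule, so a personal setting can never override the more cautious distance-based limit.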

Dynamic Task Planning

AI-powered cobots will be able to dynamically plan and adjust their actions based on changing circumstances, optimizing workflows and improving overall efficiency.
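One simple way to picture dynamic planning is a planner that is re-run whenever conditions change. The sketch below is a minimal example under that assumption: the `Task` type, the `plan` function, and the resource names are all hypothetical, and a real planner would also handle ordering constraints and timing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """Hypothetical task description used only for this example."""
    name: str
    priority: int     # lower numbers run first
    needs: frozenset  # resources that must be available


def plan(tasks: list, available: set) -> list:
    """Return the names of runnable tasks, ordered by priority."""
    ready = [t for t in tasks if t.needs <= available]
    return [t.name for t in sorted(ready, key=lambda t: t.priority)]


tasks = [
    Task("insert_screw", 2, frozenset({"screwdriver", "screws"})),
    Task("pick_housing", 1, frozenset({"gripper"})),
    Task("apply_label", 3, frozenset({"labels"})),
]

available = {"gripper", "screwdriver", "screws", "labels"}
initial_plan = plan(tasks, available)

# Circumstances change: the screw feeder runs empty, so the cobot replans.
available.discard("screws")
revised_plan = plan(tasks, available)
```

Here `revised_plan` drops the blocked task and keeps the remaining work in priority order, rather than stalling the whole sequence.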

Enhanced Safety and Collaboration

Multimodal AI will play a crucial role in improving safety and collaboration between humans and cobots.

Predictive Movement

By analyzing visual and spatial data, cobots will be able to predict human movements more accurately, allowing them to adjust their actions to avoid collisions and ensure safe interaction.
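The idea can be sketched with the simplest possible motion model: assume the person keeps moving at their current velocity, extrapolate a short time ahead, and slow down if the predicted path comes too close. The function names, the one-second horizon, and the 0.5 m radius below are assumptions made for illustration; production systems use richer prediction models and certified safety functions.

```python
import math


def predict_position(pos, vel, dt):
    """Constant-velocity extrapolation of a position after dt seconds."""
    return tuple(p + v * dt for p, v in zip(pos, vel))


def min_predicted_distance(human_pos, human_vel, robot_pos,
                           horizon_s=1.0, steps=10):
    """Smallest human-robot distance over the prediction horizon."""
    best = math.dist(human_pos, robot_pos)
    for i in range(1, steps + 1):
        t = horizon_s * i / steps
        future = predict_position(human_pos, human_vel, t)
        best = min(best, math.dist(future, robot_pos))
    return best


def should_slow_down(human_pos, human_vel, robot_pos, safety_radius_m=0.5):
    """True if the person is predicted to enter the safety radius."""
    distance = min_predicted_distance(human_pos, human_vel, robot_pos)
    return distance < safety_radius_m
```

For example, a worker 2 m away who is walking toward the robot at 1.8 m/s triggers a slowdown, while the same worker walking away does not.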

Emotion Recognition

Advanced AI algorithms will enable cobots to recognize human emotions through facial expressions, voice tone, and body language. This will allow them to respond more appropriately to human workers’ emotional states, enhancing collaboration and reducing stress.

Personalized Interaction and Learning

Multimodal AI will enable cobots to personalize their interactions with individual workers and continuously learn from these interactions.

Adaptive User Interfaces

Cobots will be able to adjust their communication methods based on individual user preferences, using a combination of visual cues, voice interactions, and haptic feedback.

Continuous Learning

Machine learning algorithms will allow cobots to improve their performance over time by learning from their interactions with human workers and adapting to individual working styles.
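A very small example of learning from interaction is an exponential moving average that tracks one worker's preference, such as a preferred handover height. This is a sketch of the general idea only; the `PreferenceEstimator` class and its parameters are hypothetical, and real systems would likely use more capable models.

```python
class PreferenceEstimator:
    """Tracks a single numeric preference with an exponential moving average."""

    def __init__(self, initial: float, learning_rate: float = 0.2):
        if not 0 < learning_rate <= 1:
            raise ValueError("learning_rate must be in (0, 1]")
        self.estimate = initial
        self.learning_rate = learning_rate

    def update(self, observed: float) -> float:
        """Move the estimate a fraction of the way toward the new observation."""
        self.estimate += self.learning_rate * (observed - self.estimate)
        return self.estimate


# Start from a default handover height of 1.0 m, then observe that this
# worker repeatedly takes parts at about 1.2 m.
height = PreferenceEstimator(initial=1.0, learning_rate=0.5)
for _ in range(5):
    height.update(1.2)
```

Each observation pulls the estimate closer to 1.2 m, so the cobot gradually adapts to the individual without discarding what it learned earlier in a single step.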

Advanced Sensor Integration

Multimodal AI will enable more sophisticated integration of various sensors, enhancing cobots’ overall capabilities.

Sensor Fusion

By combining data from multiple sensors, cobots will be able to create a more accurate and comprehensive understanding of their environment, leading to better decision-making and task execution.
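A common textbook form of sensor fusion is the inverse-variance weighted average, which trusts less noisy sensors more. The sketch below assumes two independent sensors measuring the same distance with known noise variances; the `Reading` type, the `fuse` function, and the numbers are illustrative, not taken from any specific cobot.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    value: float     # estimated distance to an object, in metres
    variance: float  # sensor noise variance (assumed known and > 0)


def fuse(readings: list) -> Reading:
    """Inverse-variance weighted average of independent readings."""
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    value = sum(w * r.value for w, r in zip(weights, readings)) / total
    # The fused variance is smaller than that of any single input.
    return Reading(value=value, variance=1.0 / total)


camera = Reading(value=1.00, variance=0.04)  # noisier sensor
lidar = Reading(value=1.10, variance=0.01)   # more precise sensor
fused = fuse([camera, lidar])
```

The fused estimate lands closer to the more precise sensor, and its variance is lower than either input, which is the sense in which combining sensors gives a more accurate picture than any one alone.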

IoT Integration

Cobots will be able to gather and process data from IoT devices throughout the production line, allowing them to adapt to changing conditions within the factory and optimize their performance accordingly.

Table: Multimodal AI Enhancements for Collaborative Robots in 2025

| Enhancement Area | Description | Impact |
|---|---|---|
| Perception | Integration of visual, auditory, and tactile data | Improved understanding of environment and human cues |
| Communication | Natural language processing and multimodal interfaces | More intuitive and efficient human-robot interaction |
| Decision-Making | Context-aware task execution and dynamic planning | Increased adaptability and efficiency in various scenarios |
| Safety | Predictive movement and emotion recognition | Enhanced safety and more natural collaboration with humans |
| Personalization | Adaptive interfaces and continuous learning | Improved user experience and long-term performance gains |
| Sensor Integration | Sensor fusion and IoT connectivity | More comprehensive environmental awareness and adaptability |

Conclusion

By 2025, multimodal AI will significantly enhance collaborative robots, making them more intelligent, adaptable, and easier to work with. These advancements will lead to increased productivity, improved safety, and more seamless integration of cobots in various industries. As the technology continues to evolve, we can expect to see even more innovative applications of multimodal AI in collaborative robotics, further bridging the gap between human capabilities and robotic efficiency.

FAQ: Multimodal AI and Collaborative Robots in 2025

- What is multimodal AI in the context of collaborative robots?

Multimodal AI refers to artificial intelligence systems that can process and integrate information from multiple sources or “modalities,” such as vision, audio, and touch, to enhance a cobot’s understanding and interaction capabilities.

- How will multimodal AI improve communication between humans and cobots?

Multimodal AI will enable cobots to understand and respond to natural language, gestures, and facial expressions, making communication more intuitive and efficient.

- Will multimodal AI-enhanced cobots be safer to work with?

Yes, these cobots will have improved ability to predict human movements, recognize emotions, and adapt their behavior accordingly, leading to safer human-robot collaboration.

- How will multimodal AI affect the adaptability of cobots?

Multimodal AI will enable cobots to better understand their environment and context, allowing them to adapt more effectively to changing tasks and conditions.

- Can multimodal AI-enhanced cobots learn and improve over time?

Yes, these cobots will be equipped with machine learning capabilities, allowing them to learn from interactions and improve their performance continuously.

- What industries are likely to benefit most from multimodal AI in cobots?

Industries such as manufacturing, healthcare, and logistics are expected to see significant benefits from multimodal AI-enhanced cobots due to the complex and varied nature of tasks in these sectors.

- How will multimodal AI impact the user interface of cobots?

Multimodal AI will enable more intuitive and personalized user interfaces, potentially incorporating voice commands, gesture recognition, and adaptive visual displays.

- Will multimodal AI-enhanced cobots require extensive training to operate?

While some training will be necessary, these cobots are expected to be more intuitive to work with, potentially reducing the learning curve for human operators.

- How will multimodal AI in cobots affect productivity?

By enhancing decision-making, adaptability, and human-robot collaboration, multimodal AI is expected to significantly improve overall productivity in cobot-assisted operations.

- What are the potential challenges in implementing multimodal AI in cobots?

Challenges may include ensuring data privacy and security, addressing potential biases in AI algorithms, and managing the complexity of integrating multiple AI modalities effectively.